Mellanox MQM9790-NS2F 64-Port 400Gb/s InfiniBand Switch | Externally Managed for UFM Software-Defined Fabrics

Product Details:

| Brand Name: | Mellanox |
|---|---|
| Model Number: | MQM9790-NS2F(920-9B210-00FN-0D0) |
| Document: | MQM9700 series.pdf |

Payment & Shipping Terms:

| Minimum Order Quantity: | 1 pc |
|---|---|
| Price: | Negotiable |
| Packaging Details: | Outer box |
| Delivery Time: | Based on inventory |
| Payment Terms: | T/T |
| Supply Ability: | Supply by project/batch |

Detail Information

| Application: | Server | Condition: | New And Original |
|---|---|---|---|
| Port Configuration: | 64×400Gb/s | Airflow Direction: | Front To Rear |
| Keyword: | Mellanox Network Switch | Name: | Mellanox Network Switch Mellanox High Speed MQM9790-NS2F Networking Switches New |

Product Description

Engineered for centralized, large-scale data center management, the MQM9790-NS2F is a 64-port 400Gb/s InfiniBand switch offering unparalleled fabric control and scalability. As a key component of the NVIDIA Quantum-2 platform, this high-performance network switch is designed to be seamlessly integrated into unified fabric management systems, enabling operators to efficiently provision, monitor, and optimize modern AI and HPC infrastructures.

- Centralized Fabric Management: Optimized for use with NVIDIA UFM® software, enabling holistic control of large-scale deployments.

- Maximized Port Density: Delivers 64 non-blocking 400Gb/s ports (51.2 Tb/s aggregate throughput) in a 1U form factor.

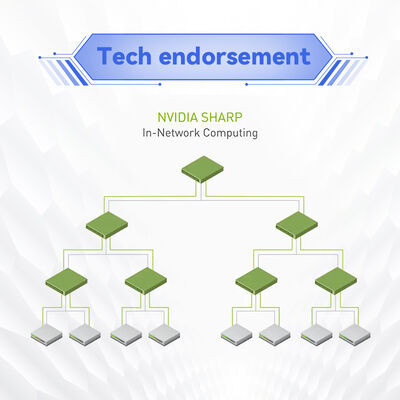

- In-Network Compute Acceleration: Incorporates third-generation NVIDIA SHARP (SHARPv3) technology to offload collective operations into the fabric and boost AI and HPC application performance.

- Topology Agnostic: Supports a wide array of fabric designs including Fat Tree, DragonFly+, and multi-dimensional Torus.

- High Reliability: Features hot-swappable, redundant power supplies and fan units for continuous operation.

- Operational Efficiency: Enables preventative troubleshooting and higher fabric utilization through advanced external management.
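As a rough illustration of what a 64-port radix means for the Fat Tree topology mentioned above, the sketch below applies the standard sizing formula for a non-blocking fat tree built from identical switches, `2 * (radix / 2) ** levels`. It assumes every switch in the fabric has 64 ports and that no oversubscription is used. The function name `fat_tree_max_hosts` is hypothetical and is not part of any NVIDIA tool.

```python
def fat_tree_max_hosts(radix: int, levels: int) -> int:
    """Upper bound on end nodes in a non-blocking fat tree of identical switches.

    Each non-top switch uses half its ports downward and half upward,
    which gives 2 * (radix / 2) ** levels end nodes.
    """
    if radix < 2 or radix % 2 != 0:
        raise ValueError("radix must be a positive even number")
    if levels < 1:
        raise ValueError("levels must be at least 1")
    return 2 * (radix // 2) ** levels


RADIX = 64  # 400Gb/s ports per switch, taken from the listing

for levels in (1, 2, 3):
    hosts = fat_tree_max_hosts(RADIX, levels)
    print(f"{levels}-level fat tree: up to {hosts} end nodes")
```

These are theoretical upper bounds. Real deployments often reserve ports for management, storage, or gateways, so actual node counts should come from a proper fabric design.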

The MQM9790 series is built on the NVIDIA Quantum-2 platform, leveraging NDR 400G InfiniBand technology. Its architecture is designed to offload complex operations from servers into the network fabric, featuring:

- SHARPv3 (Scalable Hierarchical Aggregation and Reduction Protocol) for in-network computation, reducing data movement.

- Native support for Remote Direct Memory Access (RDMA) and adaptive routing.

- Port-split capability that lets each 400Gb/s port run as two 200Gb/s ports, for up to 128 ports at 200Gb/s.

- Designed for integration with NVIDIA Unified Fabric Manager (UFM®) for end-to-end software-defined fabric management.

This externally managed network switch is ideal for large-scale, centrally controlled environments:

- Enterprise AI & ML Clusters: Building scalable, manageable fabrics for distributed training workloads.

- Hyperscale & Cloud Data Centers: Deploying as a managed fabric element under a single pane-of-glass.

- Large-Scale HPC Facilities: Where centralized monitoring, provisioning, and maintenance of thousands of nodes is critical.

- Research & Government Labs: Requiring robust, software-defined infrastructure for complex simulations.

- Managed Service Providers: Offering high-performance networking infrastructure with advanced oversight capabilities.

| Attribute | Specification for MQM9790-NS2F |
|---|---|
| Product Model | MQM9790-NS2F (Externally Managed) |
| Form Factor | 1U Rack Mount |
| Port Configuration | 64 ports of 400Gb/s InfiniBand |
| Switch Radix | 64 (Non-Blocking) |
| Aggregate Throughput | 51.2 Tb/s (bidirectional) |
| Connectors | 32 x OSFP, two 400Gb/s ports per connector (supports DAC, AOC, optical modules) |
| Management | Designed for NVIDIA UFM®; No on-board subnet manager |
| Airflow | Power-to-Connector (P2C, Forward) |
| Power Supply | 1+1 Redundant, Hot-Swappable (200-240V AC) |
| Dimensions (HxWxD) | 1.7" x 17.2" x 26.0" (43.6mm x 438mm x 660.4mm) |
| Operating Temperature | 0°C to 40°C (32°F to 104°F) |
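The headline figures in the specification table follow from simple arithmetic, which the short sketch below reproduces. The constants come from the listing (64 ports, 400Gb/s per port, 32 OSFP connectors, two-way port split). The function name `fabric_numbers` and the dictionary keys are illustrative only.

```python
PORTS = 64             # 400Gb/s InfiniBand ports
PORT_SPEED_GBPS = 400  # line rate per port
OSFP_CONNECTORS = 32   # physical OSFP connectors on the front panel
SPLIT_FACTOR = 2       # each 400Gb/s port can run as two 200Gb/s ports


def fabric_numbers(ports: int, speed_gbps: int, connectors: int, split: int) -> dict:
    """Derive per-connector, throughput, and split-port figures."""
    per_direction_tbps = ports * speed_gbps / 1000
    return {
        "ports_per_connector": ports // connectors,
        "per_direction_tbps": per_direction_tbps,
        "bidirectional_tbps": 2 * per_direction_tbps,
        "split_ports": ports * split,
        "split_speed_gbps": speed_gbps // split,
    }


for name, value in fabric_numbers(
    PORTS, PORT_SPEED_GBPS, OSFP_CONNECTORS, SPLIT_FACTOR
).items():
    print(f"{name}: {value}")
```

The 51.2 Tb/s figure counts traffic in both directions. Per direction, the 64 ports carry 25.6 Tb/s.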

- Unified Fabric Control: Part of a software-defined ecosystem with NVIDIA UFM®, enabling automation, advanced analytics, and proactive health monitoring.

- Optimized for Scale: With no on-board subnet manager, subnet management runs centrally on an external platform, which suits fabrics with thousands of nodes.

- Full Quantum-2 Performance: Delivers the same 400G port density and SHARPv3 acceleration as the internally managed MQM9700-NS2F.

- Reduced Operational Complexity: Centralized management streamlines provisioning, troubleshooting, and firmware updates across the entire fabric.

- Future-Proof Investment: Backward compatibility and support for evolving topology standards protect infrastructure investments.

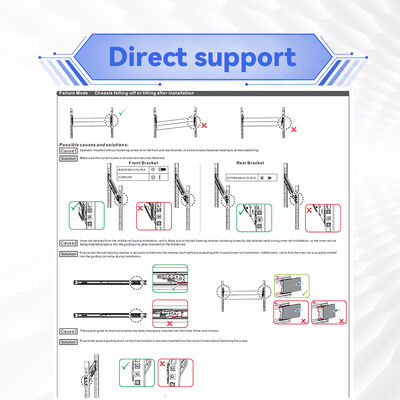

We provide comprehensive support for your MQM9790-NS2F deployment and broader network infrastructure needs:

- Warranty: Backed by a standard 1-year manufacturer warranty. Inquire about tailored extended support plans.

- Expert Integration Support: Our technical team can assist with UFM® integration, fabric design, and validation.

- Rapid Supply Chain: Access to a $10 million inventory supports availability of the MQM9790-NS2F and compatible network cards and cabling.

- 24/7 Technical Assistance: Round-the-clock customer service and technical consultation for urgent operational issues.

Q: What is the primary difference between MQM9790-NS2F and MQM9700-NS2F?

A: The key difference is management. The MQM9790-NS2F lacks an on-board subnet manager and is designed to be controlled externally by NVIDIA UFM® software, making it ideal for large, centralized fabrics. The MQM9700-NS2F includes an internal subnet manager for standalone or smaller cluster use.

Q: Is NVIDIA UFM® software required to operate this switch?

A: For full functionality and fabric management, yes. The MQM9790 series is optimized for and typically deployed with NVIDIA UFM® or a compatible external fabric management platform.

Q: Can it connect to servers with older InfiniBand network cards (NICs)?

A: Absolutely. The switch supports backward compatibility with HDR (200G), EDR (100G), and FDR (56G) InfiniBand generations via appropriate cables or adapters.

Q: Does it support port splitting for 200Gb/s connectivity?

A: Yes. Using NVIDIA's port-split technology, each 400G port can be configured as two independent 200G ports, effectively offering up to 128 ports of 200Gb/s.

- Management Dependency: Ensure you have a licensed and configured NVIDIA UFM® instance or compatible management platform before deployment.

- Fabric Planning: Successful integration requires careful fabric design, including SM (Subnet Manager) placement and link configuration.

- Hardware Compatibility: Verify that your servers are equipped with compatible NDR or HDR/EDR InfiniBand network cards (NICs) and that cabling meets the 400G specification.

- Environmental Compliance: Install within specified operating temperature (0°C-40°C) and humidity ranges. Ensure the P2C airflow direction matches your data center's cooling scheme.

- Certification & Safety: The product carries CE, FCC, RoHS, and other key certifications. Installation should be performed by qualified personnel following data center best practices.

With over a decade of industry experience, we have established ourselves as a leading provider of premium network hardware solutions. Our foundation is built on a large-scale operational facility, a robust in-house technical team, and a vast inventory valued at over $10 million.

We are authorized partners for top-tier brands including NVIDIA (Mellanox), Ruckus, Aruba, and Extreme Networks. Our core portfolio covers original, brand-new equipment: high-performance network switches such as the MQM9790, network cards (NICs), wireless access points, controllers, and structured cabling solutions.