NVIDIA MQM9700-NS2F 64-Port 400Gb/s InfiniBand Switch with SHARPv3 Technology in 1U Form Factor

Product Details:

| Brand Name: | Mellanox |
|---|---|
| Model Number: | MQM9700-NS2F (920-9B210-00FN-0M0) |
| Document: | MQM9700 series.pdf |

Payment & Shipping Terms:

| Minimum Order Quantity: | 1 pc |
|---|---|
| Price: | Negotiable |
| Packaging Details: | Outer box |
| Delivery Time: | Based on inventory |
| Payment Terms: | T/T |
| Supply Ability: | Supply by project/batch |

Detail Information

| Model Number: | MQM9700-NS2F (920-9B210-00FN-0M0) | Brand: | Mellanox |
|---|---|---|---|
| Uplink Connectivity: | 400 Gbps | Name: | InfiniBand MQM9700-NS2F Mellanox Network Switch 1U NDR 400Gb/s For Server |
| Keyword: | Mellanox Network Switch | Port Configuration: | 64x 400G, 32x OSFP |
| Highlight: | 64-Port 400Gb/s InfiniBand Switch, SHARPv3 Technology Network Switch, 1U Form Factor MQM9700-NS2F | | |

Product Description

Engineered for the most demanding high-performance computing (HPC) and artificial intelligence (AI) workloads, the MQM9700-NS2F is a fixed-configuration, 64-port 400Gb/s InfiniBand switch delivering unprecedented throughput and scalability in a 1U form factor. This network switch is a cornerstone for building extreme-scale, low-latency data center fabrics, accelerating research and innovation by moving computation into the network itself.

- Unmatched Density & Performance: 64 non-blocking 400Gb/s ports (aggregate 51.2 Tb/s bidirectional throughput).

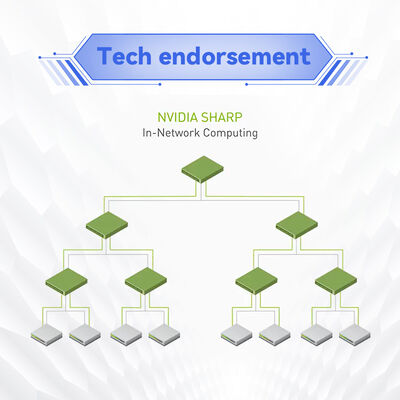

- In-Network Computing: Features third-generation NVIDIA SHARP technology (SHARPv3), accelerating collective operations by up to 32X.

- Ultra-Low Latency: Designed for real-time data exchange in highly parallelized algorithms.

- Topology Flexibility: Supports Fat Tree, DragonFly+, SlimFly, and multi-dimensional Torus topologies.

- High Availability: Hot-swappable, redundant power supplies and fan units for continuous operation.

- Advanced Manageability: On-board subnet manager for out-of-the-box deployment of up to 2,000 nodes.
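The throughput figure in the list above follows from simple arithmetic, and the switch radix gives a rough feel for Fat Tree sizing. The Python sketch below reproduces the 51.2 Tb/s aggregate figure and estimates how many end nodes a non-blocking two-tier fat tree of 64-port switches could attach. The function names are illustrative, and the two-tier formula (radix squared, divided by two) is the general textbook result, not a figure taken from the product datasheet.

```python
PORTS = 64             # 400Gb/s ports per switch (from this listing)
PORT_RATE_GBPS = 400   # NDR rate per port (from this listing)


def aggregate_tbps(ports: int, rate_gbps: int, bidirectional: bool = True) -> float:
    """Aggregate switch throughput in Tb/s."""
    one_way = ports * rate_gbps / 1000  # Gb/s -> Tb/s
    return one_way * 2 if bidirectional else one_way


def two_tier_fat_tree_nodes(radix: int) -> int:
    """End nodes in a non-blocking two-tier fat tree (assumed topology).

    Each leaf switch uses half its ports for end nodes and half for
    uplinks; each spine port reaches one leaf, so there can be up to
    `radix` leaves.
    """
    leaves = radix
    nodes_per_leaf = radix // 2
    return leaves * nodes_per_leaf


if __name__ == "__main__":
    print(aggregate_tbps(PORTS, PORT_RATE_GBPS, bidirectional=False))  # per direction
    print(aggregate_tbps(PORTS, PORT_RATE_GBPS))                       # bidirectional
    print(two_tier_fat_tree_nodes(PORTS))
```

Larger fabrics need a third switching tier or a different topology such as DragonFly+; the estimate above only covers the two-tier case.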

The MQM9700 series leverages the full NVIDIA Quantum-2 platform stack. It is built upon NDR 400G InfiniBand technology, integrating advanced in-network computing via SHARPv3 (Scalable Hierarchical Aggregation and Reduction Protocol). Key supported technologies include:

- Remote Direct Memory Access (RDMA) for CPU bypass and ultra-efficient data movement.

- Adaptive Routing and congestion control for optimized network utilization.

- Port-Split Technology enabling 128 ports of 200Gb/s, offering cost-effective topology design.

- MLNX-OS for comprehensive switch management via CLI, WebUI, SNMP, and JSON-RPC.
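To make the port-split figure concrete, the sketch below counts ports in both modes. It assumes each of the 32 OSFP connectors carries two 400Gb/s ports, which is what the 64-port total in this listing implies, and that splitting halves each port into two 200Gb/s ports. The names `OSFP_CAGES`, `PORTS_PER_CAGE`, and `port_layout` are illustrative only.

```python
OSFP_CAGES = 32      # physical OSFP connectors (from this listing)
PORTS_PER_CAGE = 2   # assumption: 64 ports / 32 connectors


def port_layout(split: bool) -> tuple[int, int]:
    """Return (port_count, rate_gbps) for the whole switch."""
    ports = OSFP_CAGES * PORTS_PER_CAGE
    if split:
        # Each 400Gb/s port becomes two 200Gb/s ports.
        return ports * 2, 200
    return ports, 400


if __name__ == "__main__":
    for split in (False, True):
        count, rate = port_layout(split)
        total_tbps = count * rate / 1000
        print(f"split={split}: {count} x {rate}Gb/s = {total_tbps} Tb/s per direction")
```

Splitting doubles the number of attachable devices without changing total switch bandwidth, which is why it can reduce the switch count in 200Gb/s deployments.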

This high-performance network switch is ideal for:

- AI/ML Training Clusters: Facilitating fast GPU-to-GPU communication for large-scale model training.

- High-Performance Computing (HPC): Accelerating scientific simulations, genomic research, and financial modeling.

- Hyperscale Data Centers: As a high-density Top-of-Rack (ToR) or spine switch in scalable fabrics.

- Cloud Infrastructure: Building high-throughput, low-latency private or public cloud backbones.

- High-Frequency Trading & Real-Time Analytics: Where microsecond latencies are critical.

| Attribute | Specification for MQM9700-NS2F |
|---|---|
| Product Model | MQM9700-NS2F (Managed) |
| Form Factor | 1U Rack Mount |
| Port Configuration | 64 ports of 400Gb/s InfiniBand |
| Switch Radix | 64 (Non-Blocking) |
| Aggregate Throughput | 51.2 Tb/s (bidirectional) |
| Connectors | 32 x OSFP (supports DAC, AOC, optical modules) |
| Power Supply | 1+1 Redundant, Hot-Swappable (200-240V AC) |
| Airflow | Power-to-Connector (P2C, Forward) |
| Management | On-board Subnet Manager, MLNX-OS (CLI, WebUI, SNMP) |
| Dimensions (HxWxD) | 1.7" x 17.2" x 26.0" (43.6mm x 438mm x 660.4mm) |
| Weight | 14.5 kg |
| Operating Temperature | 0°C to 40°C (32°F to 104°F) |

- Industry-Leading Performance: Highest port density and throughput per 1U, reducing data center footprint and cost per gigabit.

- SHARPv3 In-Network Compute: Dramatically reduces data movement, accelerating AI and HPC applications directly within the network switch fabric.

- Future-Proof Design: Backward compatible with previous InfiniBand generations and supports flexible port splitting for 200Gb/s deployment.

- Resilient & Smart Fabric: Built-in self-healing, adaptive routing, and advanced congestion control ensure maximum application performance.

- Comprehensive Ecosystem: Supported by NVIDIA's UFM fabric management platform for end-to-end visibility and control.

We stand behind every MQM9700 unit we supply. Our commitment includes:

- Warranty: Standard 1-year manufacturer's warranty. Extended warranties available.

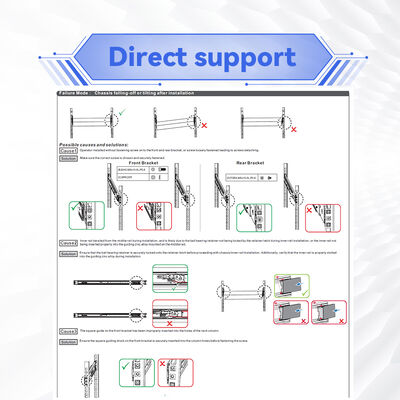

- Technical Support: 24/7 customer service and technical consultation via phone and email.

- Fast Delivery: We draw on our $10 million inventory for prompt shipment of the MQM9700-NS2F and related products such as network cards and cables.

- Integration Services: Our expert technical team can assist with network design, deployment, and optimization.

Q: What is the difference between the MQM9700-NS2F and MQM9790-NS2F models?

A: The MQM9700-NS2F is an internally managed switch with an on-board subnet manager, ideal for standalone or small cluster deployment. The MQM9790 is unmanaged and designed to be controlled by an external fabric manager like NVIDIA UFM for large-scale data centers.

Q: Can this switch connect to existing 200Gb/s or 100Gb/s InfiniBand equipment?

A: Yes. The MQM9700 series is backward compatible. Using appropriate cables or adapters, it can connect to NDR, HDR, EDR, and FDR generation network cards and switches.

Q: Does it support Ethernet protocols?

A: No. This is a native InfiniBand switch. For Ethernet fabrics, please inquire about our NVIDIA Spectrum-X offerings.

Q: What type of cables are required?

A: It supports OSFP-based Direct Attach Copper (DAC), Active Optical Cables (AOC), and standard optical transceivers. Our team can help you select the correct cabling for your distance and budget requirements.

- Compatibility: Ensure your host servers are equipped with compatible NDR 400G or HDR/EDR InfiniBand network cards (NICs).

- Environmental: Adhere to specified operating temperature (0°C-40°C) and humidity (10%-85% non-condensing) ranges for optimal performance and longevity.

- Airflow & Rack Planning: Confirm your data center's cooling scheme matches the switch's airflow direction (P2C for this model). Ensure adequate clearance for hot-swappable components.

- Safety & Compliance: The product is certified for CE, FCC, RoHS, and cTUVus standards. Installation should be performed by qualified personnel.

- Software/Firmware: For the latest features and security, plan to update to the recommended MLNX-OS version upon deployment.
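The environmental and airflow checks above can be folded into a simple pre-installation script. The following Python sketch is one possible way to do that; the limits are the ones quoted in this listing, while `OperatingLimits` and `site_issues` are hypothetical names invented for illustration and are not part of any NVIDIA tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingLimits:
    """Limits quoted in this listing for the MQM9700-NS2F."""
    temp_c_min: float = 0.0
    temp_c_max: float = 40.0
    humidity_min: float = 10.0   # percent, non-condensing
    humidity_max: float = 85.0
    airflow: str = "P2C"         # power-to-connector


def site_issues(temp_c, humidity_pct, rack_airflow, limits=OperatingLimits()):
    """Return a list of problems found; an empty list means the site fits."""
    issues = []
    if not limits.temp_c_min <= temp_c <= limits.temp_c_max:
        issues.append(f"temperature {temp_c} C is outside "
                      f"{limits.temp_c_min}-{limits.temp_c_max} C")
    if not limits.humidity_min <= humidity_pct <= limits.humidity_max:
        issues.append(f"humidity {humidity_pct}% is outside "
                      f"{limits.humidity_min}-{limits.humidity_max}%")
    if rack_airflow != limits.airflow:
        issues.append(f"rack airflow {rack_airflow} does not match "
                      f"switch airflow {limits.airflow}")
    return issues


if __name__ == "__main__":
    print(site_issues(27.0, 45.0, "P2C"))   # a site within all limits
    print(site_issues(43.0, 45.0, "C2P"))   # too warm, wrong airflow direction
```

Returning a list of issues rather than a single pass/fail flag lets a planner see every mismatch in one run.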

With over a decade of industry experience, we have established ourselves as a leading provider of premium network hardware solutions. Our foundation is built on a large-scale operational facility, a robust in-house technical team, and a vast inventory valued at over $10 million.

We are an authorized partner for top-tier brands including NVIDIA (Mellanox), Ruckus, Aruba, and Extreme Networks. Our core portfolio encompasses original, brand-new equipment such as high-performance network switches, network cards (NICs), wireless access points, controllers, and structured cabling solutions.