MCX653106A-ECAT Mellanox 100Gb NIC ConnectX-6 VPI HDR100 EDR IB Dual-Port

Product Details:

| Brand Name: | Mellanox |
|---|---|
| Model Number: | MCX653106A-ECAT |
| Document: | connectx-6-infiniband.pdf |

Payment & Shipping Terms:

| Minimum Order Quantity: | 1 pc |
|---|---|
| Price: | Negotiable |
| Packaging Details: | Outer box |
| Delivery Time: | Based on inventory |
| Payment Terms: | T/T |
| Supply Ability: | Supply by project/batch |

Detail Information

| Products Status: | Stock | Application: | Server |
|---|---|---|---|
| Interface Type: | InfiniBand / Ethernet (VPI) | Ports: | Dual |
| Max Speed: | 100Gb/s | Type: | Wired |
| Condition: | New and original | Warranty Time: | 1 Year |
| Model: | MCX653106A-ECAT | Name: | MCX653106A-ECAT Mellanox 100Gb NIC ConnectX-6 VPI HDR100 EDR IB Dual-Port |
| Keyword: | Mellanox Network Card | | |
| Highlight: | MCX653106A-ECAT Mellanox NIC card, MCX653106A-ECAT Mellanox 100Gb NIC, Dual-port Mellanox 100Gb NIC | | |

Product Description

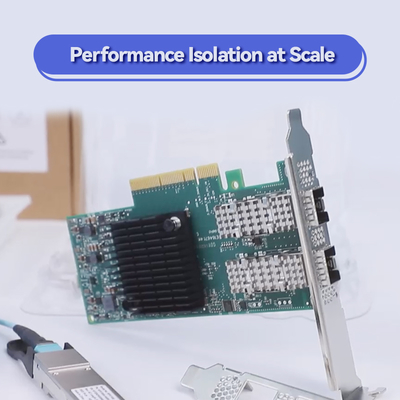

NVIDIA ConnectX-6 MCX653106A-ECAT InfiniBand Smart Network Adapter

The NVIDIA ConnectX-6 MCX653106A-ECAT is a high-performance dual-port InfiniBand smart network adapter delivering up to 100Gb/s per port (HDR100 or EDR InfiniBand, or 100Gb Ethernet through VPI). Designed for data-intensive environments, it features in-network computing, hardware offloads, and advanced security with block-level encryption. It is well suited to HPC, AI, machine learning, and cloud data centers, where it reduces CPU overhead and latency while maximizing throughput and scalability.

Key Features:

- Dual-port 200Gb/s InfiniBand and Ethernet connectivity

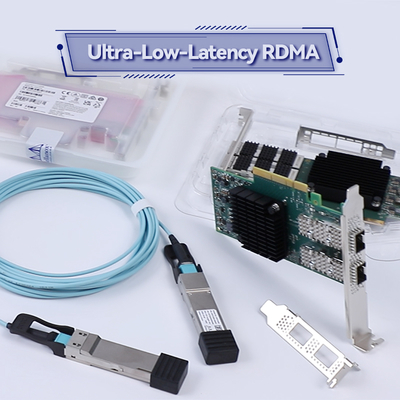

- Hardware offloads for RDMA, NVMe over Fabrics, and encryption

- In-network computing acceleration for improved efficiency

- Low latency and high message rate (215 million messages/sec)

- RoCE, SR-IOV, and ASAP² for virtualization and overlay networks
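On Linux, the SR-IOV feature listed above is usually exercised through the kernel's generic sysfs files `sriov_totalvfs` and `sriov_numvfs`. The Python sketch below is a minimal illustration, not NVIDIA tooling. It assumes a Linux host with the mlx5 driver loaded, root privileges, and SR-IOV already enabled in the adapter firmware (for example with NVIDIA's `mlxconfig` tool). The helper name `set_num_vfs` is hypothetical, and the interface name you pass it depends on your system.

```python
from pathlib import Path


def set_num_vfs(netdev: str, num_vfs: int, sysfs_root: str = "/sys/class/net") -> int:
    """Ask the kernel to create num_vfs SR-IOV virtual functions for a netdev.

    Hypothetical helper built on the generic Linux sysfs files
    sriov_totalvfs and sriov_numvfs. Needs root, and SR-IOV must already
    be enabled in the adapter firmware.
    """
    device = Path(sysfs_root) / netdev / "device"
    total = int((device / "sriov_totalvfs").read_text().strip())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{netdev}: requested {num_vfs} VFs, device allows at most {total}")
    numvfs = device / "sriov_numvfs"
    # The kernel refuses to change a non-zero VF count directly, so reset to 0 first.
    if int(numvfs.read_text().strip()) != 0:
        numvfs.write_text("0")
    numvfs.write_text(str(num_vfs))
    # Read back what the kernel actually applied.
    return int(numvfs.read_text().strip())
```

For example, `set_num_vfs("enp1s0f0", 4)` would request four VFs on a port whose netdev happens to be named `enp1s0f0` (a placeholder name). The `sysfs_root` parameter exists only so the logic can be exercised against a scratch directory.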

Technology

The ConnectX-6 adapter builds on InfiniBand HDR100/EDR, PCIe Gen 4.0, and hardware-based offloads for networking, storage, and security. It supports RDMA over Converged Ethernet (RoCE), stateless TCP/UDP/IP offloads, and NVIDIA GPUDirect® RDMA for GPU-to-GPU communication across servers. The ConnectX-6 family also supports NVIDIA Socket Direct technology for multi-socket server optimization, delivered through dedicated Socket Direct card variants.

How It Works

The ConnectX-6 smart NIC offloads network, storage, and security processing from the host CPU. Dedicated hardware engines on the card handle packet processing, encryption, and virtualization tasks directly. RDMA lets an application read from or write to memory on a remote host without involving the remote CPU or operating system, which reduces both latency and CPU usage. In-network computing engines further accelerate collective operations and data transport.

Applications

- High-Performance Computing (HPC) clusters

- Artificial Intelligence and Deep Learning training

- Hyperscale Cloud Data Centers

- Machine Learning and Big Data analytics

- Virtualized and NFV environments

- High-frequency trading and financial modeling

Specifications

| Attribute | Value |
|---|---|
| Model | MCX653106A-ECAT |
| Ports | 2 x QSFP56 |
| Max Speed | 100 Gb/s per port (HDR100 / EDR InfiniBand, 100GbE) |
| Host Interface | PCIe Gen 3.0/4.0 x16 |
| Protocols | InfiniBand, Ethernet (VPI) |
| RDMA Support | Yes (native InfiniBand RDMA; RoCE on Ethernet) |
| Encryption | XTS-AES 256/512-bit (block-level) |
| Virtualization | SR-IOV (up to 1K VFs) |
| OS Support | Linux, Windows, VMware, FreeBSD |
| Form Factor | Low-profile PCIe |
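Because this is a VPI card, a useful post-installation check is to confirm which link layer each port came up in and at what rate. The Python sketch below is a minimal illustration that assumes a Linux host where RDMA ports are exposed under `/sys/class/infiniband/<device>/ports/<n>/` with `link_layer`, `state`, and `rate` files, as the kernel RDMA stack normally provides. The function names are hypothetical, and the sample values in the comment are only typical examples.

```python
import re
from pathlib import Path

# Typical contents on an HDR100 port (exact values depend on port mode and driver):
#   link_layer -> "InfiniBand"   state -> "4: ACTIVE"   rate -> "100 Gb/sec (2X HDR)"
RATE_RE = re.compile(r"\s*([\d.]+)\s*Gb/sec")


def parse_rate_gbps(rate: str) -> float:
    """Extract the numeric rate from a sysfs rate string."""
    match = RATE_RE.match(rate)
    if match is None:
        raise ValueError(f"unrecognized rate string: {rate!r}")
    return float(match.group(1))


def describe_ports(sysfs_root: str = "/sys/class/infiniband") -> list:
    """Return link layer, state and rate for every RDMA port found under sysfs_root."""
    root = Path(sysfs_root)
    ports = []
    if not root.is_dir():
        return ports
    for device in sorted(root.iterdir()):
        ports_dir = device / "ports"
        if not ports_dir.is_dir():
            continue
        for port in sorted(ports_dir.iterdir(), key=lambda p: int(p.name)):
            ports.append({
                "device": device.name,
                "port": int(port.name),
                "link_layer": (port / "link_layer").read_text().strip(),
                "state": (port / "state").read_text().strip(),
                "rate_gbps": parse_rate_gbps((port / "rate").read_text()),
            })
    return ports
```

Running `for p in describe_ports(): print(p)` on the target server lists each port. A port configured for Ethernet would report `Ethernet` as its link layer instead of `InfiniBand`.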

Advantages

- Superior throughput and lower latency compared to previous generations

- Hardware offloading reduces CPU utilization and power consumption

- Enhanced security with inline encryption and FIPS compliance

- Support for both InfiniBand and Ethernet in one card

- Ideal for GPU-driven and AI workloads with GPUDirect RDMA

Service & Support

We offer comprehensive technical support, including configuration assistance, driver installation, and compatibility guidance. All products come with a standard 1-year warranty. Bulk order discounts are available. We ensure fast delivery and ongoing customer service.

FAQ

Q: What is the difference between ConnectX-6 and ConnectX-5?

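A: The ConnectX-6 family supports up to 200Gb/s per port (HDR), versus 100Gb/s for ConnectX-5, along with improved offload capabilities and enhanced in-network computing. This MCX653106A-ECAT model runs at up to 100Gb/s per port (HDR100/EDR InfiniBand or 100GbE).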

Q: Does this NIC card support Ethernet?

A: Yes. It is a VPI card, so each port can run either InfiniBand or Ethernet, at up to 100Gb/s.

Q: Is RoCE supported?

A: Yes, RDMA over Converged Ethernet (RoCE) is fully supported.

Q: Can I use this card in a VMware environment?

A: Yes, it is compatible with VMware vSphere and supports SR-IOV.

Q: What cables are required?

A: Use QSFP56 or QSFP28 optical or DAC cables rated for the port's operating mode: HDR100 InfiniBand, EDR InfiniBand, or 100GbE.

Precautions

- Ensure proper cooling and airflow in the server chassis.

- Verify PCIe slot compatibility (PCIe Gen3/4 x16 required).

- Use certified cables and transceivers for optimal performance.

- Update to the latest firmware and drivers from NVIDIA.

- Follow ESD safety procedures during installation.
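The PCIe slot check above matters because two 100Gb/s ports together can exceed what a Gen3 x16 slot carries. The back-of-the-envelope Python sketch below uses the PCIe per-lane signalling rates (8 GT/s for Gen3, 16 GT/s for Gen4) and 128b/130b line encoding. It ignores transaction-layer protocol overhead, so real usable throughput is somewhat lower than these figures. The function names are illustrative only.

```python
# PCIe per-lane signalling rate in GT/s; Gen3 and Gen4 both use 128b/130b encoding.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def pcie_link_gbps(gen: int, lanes: int = 16) -> float:
    """Bit rate per direction after line encoding (TLP/DLLP overhead not subtracted)."""
    return PCIE_GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes


def slot_covers_ports(gen: int, lanes: int = 16, port_gbps: float = 100.0, active_ports: int = 2) -> bool:
    """Upper-bound check: True only means the PCIe link is not obviously the bottleneck."""
    return pcie_link_gbps(gen, lanes) >= port_gbps * active_ports


for gen in (3, 4):
    print(f"Gen{gen} x16: {pcie_link_gbps(gen):.2f} Gb/s per direction, "
          f"both ports at line rate: {slot_covers_ports(gen)}")
# Gen3 x16: 126.03 Gb/s per direction, both ports at line rate: False
# Gen4 x16: 252.06 Gb/s per direction, both ports at line rate: True
```

By this estimate, a Gen3 x16 slot is enough for one port at full 100Gb/s, but running both ports at line rate simultaneously calls for a Gen4 x16 slot.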

Company Introduction

With over a decade of industry experience, our company operates a large-scale facility supported by a robust technical team. We have built a strong customer base and extensive expertise, enabling us to deliver high-quality networking products at competitive prices. Our portfolio includes leading brands such as Mellanox, Ruckus, Aruba, and Extreme. We supply a wide range of products including network switches, network cards, wireless access points, wireless controllers, and cables. Our inventory exceeds 10 million units, ensuring ample selection and volume supply capabilities. We provide 24/7 customer consultation and technical support, backed by a professional sales and engineering team that has earned a distinguished reputation in the global market.