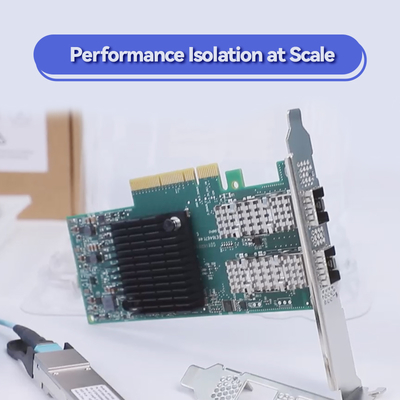

NVIDIA ConnectX-7 Adapter MCX75310AAS-NEAT (900-9X766-003N-SQ0), Single-Port OSFP, InfiniBand: NDR 400Gb/s (Default Speed), Ethernet: 400GbE

Product Details:

| Attribute | Value |
|---|---|
| Brand Name: | Mellanox |
| Model Number: | MCX75310AAS-NEAT (900-9X766-003N-SQ0) |
| Document: | Connectx-7 infiniband.pdf |

Payment & Shipping Terms:

| Attribute | Value |
|---|---|
| Minimum Order Quantity: | 1 pc |
| Price: | Negotiable |
| Packaging Details: | Outer box |
| Delivery Time: | Based on inventory |
| Payment Terms: | T/T |
| Supply Ability: | Supply by project/batch |

Detail Information

| Attribute | Value | Attribute | Value |
|---|---|---|---|
| Model No.: | MCX75310AAS-NEAT (900-9X766-003N-SQ0) | Ports: | Single-Port |
| Technology: | InfiniBand | Interface Type: | OSFP56 |
| Specification: | 16.7 cm x 6.9 cm | Origin: | India / Israel / China |
| Transmission Rate: | 400GbE | Host Interface: | Gen3 x16 |


Product Description

NVIDIA ConnectX-7 MCX755106AS-HEAT 400G InfiniBand/Ethernet Network Adapter

1. Product Overview

The NVIDIA ConnectX-7 MCX755106AS-HEAT (P/N: 900-9X7AH-0078-DTZ) is a high-performance dual-port network adapter that supports both InfiniBand NDR 400G and 400GbE Ethernet. Designed for modern data centers, it delivers ultra-low latency, hardware-accelerated security, and advanced in-network computing, making it well suited to AI, machine learning, high-performance computing (HPC), and hyperscale cloud environments.

2. Key Characteristics

- Dual-port 400G InfiniBand and Ethernet support

- Hardware offload for RDMA, RoCE, GPUDirect® Storage, and GPUDirect RDMA

- In-line encryption for IPsec, TLS, and MACsec

- Support for NVMe over Fabrics (NVMe-oF) and NVMe/TCP

- Precision timing with IEEE 1588 PTP and SyncE

- SR-IOV and Multi-Host support

- Compliant with InfiniBand Trade Association 1.5 specifications

3. Core Technologies

The ConnectX-7 NIC incorporates the following technologies:

- Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCE)

- NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP™)

- ASAP² (Accelerated Switching and Packet Processing)

- Programmable flexible parser and connection tracking

- On-demand paging (ODP) and user-mode registration (UMR)

4. Working Principle

The ConnectX-7 network card operates by offloading network, storage, and security processing from the CPU to dedicated hardware engines. It uses RDMA to enable direct memory access between servers and GPUs, reducing latency and CPU overhead. In-network computing engines accelerate collective operations and data reduction, while inline encryption engines secure data without sacrificing performance.

5. Applications

- Artificial Intelligence and Deep Learning Training

- High-Performance Computing (HPC) Clusters

- Cloud Data Centers and Hyper-Scale Infrastructure

- Storage Area Networks (SAN) and NVMe-oF Storage

- Financial Trading and Real-Time Analytics

6. Specifications

| Attribute | Value |
|---|---|
| Model Number | MCX755106AS-HEAT (900-9X7AH-0078-DTZ) |
| Ports | 2 |
| Speed | Up to 400Gb/s per port |
| Protocols | InfiniBand (NDR/HDR/EDR), Ethernet |
| Host Interface | PCIe Gen5 x16 |
| Form Factor | FHFL (Full Height, Full Length) |
| Security | IPsec, TLS, MACsec, Secure Boot |
| Compatibility | Linux, Windows, VMware, Kubernetes |

7. Advantages & Selling Points

- Industry-leading 400G throughput with ultra-low latency

- Hardware offloads reduce CPU utilization by up to 50% compared to software solutions

- Enhanced RoCE and InfiniBand support for lossless networks

- Superior scalability with support for 16 million IO channels

- Advanced timing synchronization for financial and real-time applications

8. Service & Support

We provide a 5-year limited warranty, global technical support, and next-business-day advance replacement (region-dependent). Custom configuration and integration services are available for large-scale deployments.

9. Frequently Asked Questions

Q: Is this card backward compatible with older InfiniBand generations?

A: Yes, it supports NDR, HDR, EDR, and earlier InfiniBand standards.

Q: Can I use this NIC for both Ethernet and InfiniBand simultaneously?

A: The card supports both protocols but operates in one mode per port at a time.

Q: Does it support VMware ESXi?

A: Yes, with SR-IOV support for virtualized environments.

Q: What cables are compatible?

A: It supports DAC, AOC, and optical transceivers for both InfiniBand and Ethernet.

Q: Is GPUDirect Storage supported?

A: Yes, it fully supports GPUDirect Storage and GPUDirect RDMA.

10. Precautions

- Ensure adequate airflow for thermal management. Maximum operating temperature is 85°C.

- Use only certified cables and transceivers to avoid link degradation.

- Verify PCIe slot compatibility (PCIe Gen5 x16 recommended).

- Update to the latest firmware and drivers for optimal performance and security.

- Comply with local EMC and safety regulations during installation.
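For the PCIe slot check above, the following is a minimal sketch of how the negotiated link could be inspected on a Linux host. It assumes the kernel exposes the standard `current_link_speed` and `current_link_width` attributes under `/sys/bus/pci/devices/`. The function names `parse_link` and `read_link` are illustrative and not part of any NVIDIA tool, and the bandwidth figure accounts for line encoding only, not PCIe protocol overhead.

```python
import re
from pathlib import Path

# Per-lane raw rate in GT/s -> (PCIe generation, line-encoding efficiency).
PCIE_GENERATIONS = {
    2.5: (1, 8 / 10),
    5.0: (2, 8 / 10),
    8.0: (3, 128 / 130),
    16.0: (4, 128 / 130),
    32.0: (5, 128 / 130),
}


def parse_link(speed_text, width_text):
    """Return (generation, lanes, usable_gbps) from sysfs link attribute strings."""
    match = re.search(r"(\d+(?:\.\d+)?)\s*GT/s", speed_text)
    if match is None:
        raise ValueError(f"unrecognized link speed: {speed_text!r}")
    rate = float(match.group(1))
    if rate not in PCIE_GENERATIONS:
        raise ValueError(f"unknown PCIe rate: {rate} GT/s")
    generation, efficiency = PCIE_GENERATIONS[rate]
    lanes = int(width_text.strip().lstrip("x"))
    # Bandwidth after line encoding, before packet/protocol overhead.
    return generation, lanes, rate * efficiency * lanes


def read_link(pci_address):
    """Read the negotiated link of one device, e.g. a (hypothetical) '0000:3b:00.0'."""
    device = Path("/sys/bus/pci/devices") / pci_address
    speed = (device / "current_link_speed").read_text()
    width = (device / "current_link_width").read_text()
    return parse_link(speed, width)
```

Under these assumptions, a Gen5 x16 link works out to roughly 504 Gb/s in each direction, while a Gen3 x16 link gives about 126 Gb/s, which is well below the line rate of a single 400Gb/s port. The adapter's PCI address can be found with `lspci`.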

11. Company Introduction

With over a decade of industry experience, our company operates a large-scale production facility and is supported by a robust technical team. We have built a solid reputation by serving a broad client base and delivering high-quality networking products at competitive prices.

We are authorized distributors for leading brands including NVIDIA Networking (Mellanox), Ruckus, Aruba, and Extreme Networks. Our inventory includes brand-new original equipment such as network switches, network cards, wireless access points, controllers, and cables.

We maintain over $10M in inventory to ensure prompt availability and bulk supply capabilities. Our dedicated customer service and technical support teams are available 24/7 to assist with product selection, configuration, and post-sale support.