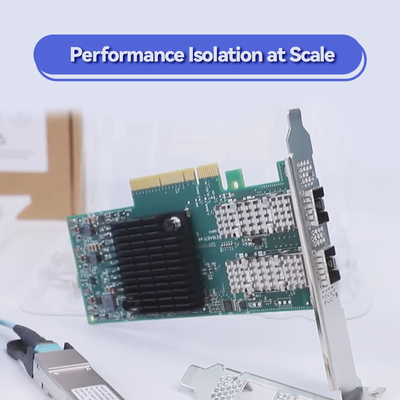

Mellanox ConnectX-6 VPI Adapter MCX653106A-HDAT, Dual-Port QSFP56, HDR InfiniBand / 200GbE Network Card

Product Details:

| Brand Name: | Mellanox |
|---|---|
| Model Number: | MCX653106A-HDAT |
| Document: | connectx-6-infiniband.pdf |

Payment & Shipping Terms:

| Minimum Order Quantity: | 1 pc |
|---|---|
| Price: | Negotiable |
| Packaging Details: | Outer box |
| Delivery Time: | Based on inventory |
| Payment Terms: | T/T |
| Supply Ability: | Supply by project/batch |

Detail Information:

| Product Status: | In stock | Application: | Server |
|---|---|---|---|
| Interface Type: | InfiniBand / Ethernet (VPI) | Ports: | Dual |
| Max Speed: | 200Gb/s (HDR / 200GbE) | Connector Type: | QSFP56 |
| Type: | Wired | Condition: | New and original |
| Model: | MCX653106A-HDAT | | |
| Highlight: | HDR 200GbE Mellanox Network Card, Mellanox Network Card VPI Adapter, HDR 200GbE dual Ethernet card | | |


Product Description

NVIDIA ConnectX-6 InfiniBand Adapter: High-Performance Networking Solution

The NVIDIA ConnectX-6 InfiniBand Smart Host Channel Adapter is a high-performance network interface card for data center networking. It delivers high bandwidth, ultra-low latency, and In-Network Computing capabilities, making it well suited to demanding HPC, AI, and cloud environments. With dual-port 200Gb/s connectivity and extensive hardware offloads, the ConnectX-6 adapter improves application performance while freeing host CPU cycles that would otherwise be spent on network processing.

Distinguishing Characteristics

- Dual-port 200Gb/s InfiniBand and Ethernet connectivity

- Hardware-accelerated XTS-AES block-level encryption with 256/512-bit keys

- NVIDIA In-Network Computing technology for enhanced scalability

- Comprehensive protocol offload including RDMA, NVMe over Fabrics, and TCP/UDP/IP

- Advanced virtualization support with SR-IOV and NVIDIA ASAP² technology
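On Linux, SR-IOV virtual functions are normally created through the kernel's standard PCI sysfs attributes `sriov_totalvfs` and `sriov_numvfs`. The following is a minimal sketch assuming that interface; `set_num_vfs` and the interface name are hypothetical, not part of any NVIDIA tool. On NVIDIA adapters the ceiling reported by `sriov_totalvfs` is typically configured in firmware first (for example with mlxconfig's `SRIOV_EN` and `NUM_OF_VFS` parameters).

```python
from pathlib import Path


def set_num_vfs(iface: str, num_vfs: int, sysfs_root: str = "/sys") -> int:
    """Request `num_vfs` SR-IOV virtual functions for a network interface.

    Hypothetical helper built on the standard Linux sysfs attributes
    sriov_totalvfs and sriov_numvfs; needs root on a real system.
    """
    dev = Path(sysfs_root) / "class" / "net" / iface / "device"
    total = int((dev / "sriov_totalvfs").read_text().strip())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"requested {num_vfs} VFs, device allows 0..{total}")

    numvfs = dev / "sriov_numvfs"
    current = int(numvfs.read_text().strip())
    if current == num_vfs:
        return current  # nothing to change
    if current != 0 and num_vfs != 0:
        # The kernel refuses to change a non-zero VF count directly,
        # so release the existing VFs first.
        numvfs.write_text("0")
    numvfs.write_text(str(num_vfs))
    return int(numvfs.read_text().strip())


if __name__ == "__main__":
    # "enp65s0f0" is a hypothetical interface name; substitute your own.
    print(set_num_vfs("enp65s0f0", 8))
```

Taking `sysfs_root` as a parameter keeps the helper testable against a scratch directory instead of live hardware.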

Advanced Technological Framework

The ConnectX-6 network adapter incorporates end-to-end congestion control, hardware-based packet pacing with sub-nanosecond accuracy, and advanced memory management. As a VPI (Virtual Protocol Interconnect) adapter, each port can be configured for either InfiniBand or Ethernet, so a single card can serve both fabrics at the same time. It also provides hardware offloads for overlay networks (VXLAN, NVGRE, Geneve) and storage protocols (NVMe-oF, iSER, SRP).
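To see which protocol each port is actually running, Linux exposes RDMA port attributes under `/sys/class/infiniband/<device>/ports/<n>/`, including `link_layer` (reported as `InfiniBand` or `Ethernet`), `rate`, and `state`. The sketch below assumes that sysfs layout and an mlx5-style device name such as `mlx5_0`; `port_protocols` is a hypothetical helper.

```python
from pathlib import Path


def _read_attr(path: Path) -> str:
    """Return a stripped sysfs attribute, or 'unknown' if it is absent."""
    return path.read_text().strip() if path.exists() else "unknown"


def port_protocols(rdma_dev: str, sysfs_root: str = "/sys") -> dict:
    """Map each port number of an RDMA device to its link layer, rate and state."""
    ports_dir = Path(sysfs_root) / "class" / "infiniband" / rdma_dev / "ports"
    info = {}
    for port in sorted(ports_dir.iterdir(), key=lambda p: int(p.name)):
        info[int(port.name)] = {
            "link_layer": _read_attr(port / "link_layer"),  # "InfiniBand" or "Ethernet"
            "rate": _read_attr(port / "rate"),
            "state": _read_attr(port / "state"),
        }
    return info


if __name__ == "__main__":
    # "mlx5_0" is an assumed device name; list /sys/class/infiniband to find yours.
    for num, attrs in port_protocols("mlx5_0").items():
        print(num, attrs["link_layer"], attrs["rate"], attrs["state"])
```

With the mlx5 driver each physical port is typically exposed as its own RDMA device (for example `mlx5_0` and `mlx5_1`), each with a single port 1, so the helper may need to be called once per device.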

Operational Methodology

This high-performance NIC offloads network processing from the host CPU to dedicated hardware engines. It uses Remote Direct Memory Access (RDMA) to move data directly between the memory of two systems, bypassing the operating system kernel and the remote CPU on the data path, which significantly reduces latency. Its In-Network Computing engines offload communication operations such as MPI tag matching and collective operations from the host, further optimizing data movement and processing.

Implementation Environments

- High-performance computing clusters for scientific research and engineering simulations

- Artificial intelligence and deep learning training infrastructures

- Hyperscale cloud data centers and storage area networks

- Network function virtualization (NFV) and software-defined networking (SDN) environments

- High-frequency trading and financial modeling systems

Technical Specifications

| Specification Category | Detailed Parameters |
|---|---|
| Product Model | MCX653106A-HDAT |
| Data Rate | Ethernet: 200/100/50/40/25/10/1 Gb/s per port; InfiniBand: up to HDR 200 Gb/s per port |
| Host Interface | PCIe 4.0 x16 (backward compatible with PCIe 3.0) |
| Physical Ports | 2 x QSFP56 cages |
| Protocol Support | InfiniBand, Ethernet, RoCE v2 |
| Security Features | Hardware-based XTS-AES block-level encryption (256/512-bit keys), FIPS compliant |
| Virtualization | SR-IOV with up to 1000 virtual functions |
| Power Consumption | Typically under 25 W (varies with cables and transceivers) |
| Form Factor | Standard-profile PCIe card with bracket |
| Operating Systems | Linux, Windows Server, VMware, FreeBSD |
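The table lists a PCIe 4.0 x16 host interface and two 200 Gb/s ports, so a quick arithmetic check shows how much of that port bandwidth the host bus can carry. This sketch assumes the standard PCIe per-lane rates (8 GT/s for 3.0, 16 GT/s for 4.0) with 128b/130b encoding and ignores packet-level overhead; the names are illustrative only.

```python
# Per-lane transfer rate (GT/s) and 128b/130b line-code efficiency by PCIe generation.
PCIE_GENERATIONS = {
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}

PORT_RATE_GBPS = 200.0  # per-port line rate from the specification table


def pcie_raw_gbps(gen: int, lanes: int = 16) -> float:
    """Per-direction PCIe bandwidth after line encoding, before packet/protocol overhead."""
    rate_gts, efficiency = PCIE_GENERATIONS[gen]
    return rate_gts * lanes * efficiency


if __name__ == "__main__":
    for gen in (3, 4):
        host = pcie_raw_gbps(gen)
        for ports in (1, 2):
            demand = ports * PORT_RATE_GBPS
            verdict = "fits" if host >= demand else "limited by PCIe"
            print(f"PCIe {gen}.0 x16 ~{host:.1f} Gb/s vs {ports} x 200 Gb/s: {verdict}")
```

Under these assumptions a PCIe 4.0 x16 slot (about 252 Gb/s per direction) can carry one port at the full 200 Gb/s but not both ports at once, while a PCIe 3.0 x16 slot (about 126 Gb/s) falls short of a single 200 Gb/s port. Real throughput is somewhat lower once PCIe packet headers are counted.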

Competitive Advantages

- Industry-leading message rate of up to 215 million messages per second

- Hardware-based block-level encryption offloaded from the host CPU

- Comprehensive offloads for networking, storage, and security functions

- Support for both InfiniBand and Ethernet environments on the same hardware

- NVIDIA Socket Direct technology for optimized multi-socket server performance (on dedicated ConnectX-6 Socket Direct models)
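The message rate and the line rate bound throughput together: small messages run out of message rate before they fill the link. The sketch below is a simple min-of-two-limits model, not a benchmark. It assumes the listing's 215 million messages per second applies to a single 200 Gb/s port and ignores header and wire overhead.

```python
LINE_RATE_GBPS = 200.0    # one port at HDR / 200GbE
MSG_RATE_PER_SEC = 215e6  # message rate quoted in this listing (assumed per port)


def crossover_bytes(line_rate_gbps: float = LINE_RATE_GBPS,
                    msg_rate: float = MSG_RATE_PER_SEC) -> float:
    """Payload size at which the message-rate and line-rate limits meet."""
    return line_rate_gbps * 1e9 / 8 / msg_rate


def achievable_gbps(msg_bytes: float,
                    line_rate_gbps: float = LINE_RATE_GBPS,
                    msg_rate: float = MSG_RATE_PER_SEC) -> float:
    """Upper bound on payload throughput: whichever limit is hit first wins."""
    return min(line_rate_gbps, msg_bytes * 8 * msg_rate / 1e9)


if __name__ == "__main__":
    print(f"crossover ~{crossover_bytes():.0f} bytes")
    for size in (8, 64, 256, 4096):
        print(f"{size:>5} B messages -> at most {achievable_gbps(size):.1f} Gb/s")
```

Under this model, messages smaller than roughly 116 bytes are limited by message rate rather than bandwidth, which is why message rate matters for latency-sensitive HPC and trading workloads.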

Support and Services

We provide comprehensive technical support, including 24/7 customer consultation, firmware updates, and integration assistance. All products come with the standard manufacturer warranty. Custom configuration services are available for large-scale deployments, along with flexible supply chain options to meet urgent requirements.

Frequently Asked Questions

Q: What is the difference between ConnectX-6 and previous generations?

A: Compared with the previous generation (ConnectX-5, up to 100Gb/s), the ConnectX-6 doubles per-port bandwidth to 200Gb/s, enhances In-Network Computing capabilities, and adds hardware-based block-level encryption not available in earlier models.

Q: Can this adapter be used in Ethernet-only environments?

A: Yes. As a VPI adapter, each ConnectX-6 port can be configured for either InfiniBand or Ethernet, so both ports can run Ethernet (up to 200GbE) in an Ethernet-only network.
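Port protocol is usually changed with NVIDIA's firmware tools (MFT), whose `mlxconfig` utility exposes `LINK_TYPE_P1` and `LINK_TYPE_P2` parameters (1 = InfiniBand, 2 = Ethernet). The sketch below only builds the command line and does not run it; the device path and the `link_type_command` helper are hypothetical, and parameter names should be confirmed with `mlxconfig -d <device> query` on your firmware. The change takes effect after a reboot or firmware reset.

```python
import shlex

# mlxconfig LINK_TYPE_P<n> values: 1 = InfiniBand, 2 = Ethernet.
LINK_TYPES = {"ib": 1, "eth": 2}


def link_type_command(device: str, port_modes: dict) -> list:
    """Build (but do not run) an mlxconfig command setting each port's protocol."""
    args = ["mlxconfig", "-d", device, "-y", "set"]
    for port in sorted(port_modes):
        if port not in (1, 2):
            raise ValueError(f"this is a dual-port card; got port {port}")
        mode = port_modes[port].lower()
        if mode not in LINK_TYPES:
            raise ValueError(f"unknown mode {mode!r}; expected 'ib' or 'eth'")
        args.append(f"LINK_TYPE_P{port}={LINK_TYPES[mode]}")
    return args


if __name__ == "__main__":
    # Hypothetical MST device path; run `mst status` to find the real one.
    cmd = link_type_command("/dev/mst/mt4123_pciconf0", {1: "eth", 2: "eth"})
    print(shlex.join(cmd))
```

Returning an argument list rather than a shell string keeps the command safe to pass to `subprocess.run` after review.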

Q: What cooling requirements does this card have?

A: The adapter requires adequate airflow for proper thermal management. For high-density deployments, consider optional versions with enhanced cooling solutions.

Installation Considerations

- Ensure proper PCIe slot alignment and secure mounting

- Verify compatible cables and transceivers (QSFP56 on the adapter side for 200Gb/s connectivity)

- Install latest firmware and drivers for full feature availability

- Maintain adequate airflow for thermal management

- Follow electrostatic discharge (ESD) protection procedures during handling
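After installation, it is worth confirming that the card actually negotiated a PCIe 4.0 x16 link, since a narrower or slower slot caps throughput. Linux reports the negotiated link in the standard sysfs attributes `current_link_speed` and `current_link_width`. The sketch below assumes those attributes; the PCI address and the `check_pcie_link` helper are hypothetical.

```python
from pathlib import Path


def _speed_gts(text: str) -> float:
    """Parse sysfs link speed such as '16.0 GT/s PCIe' or '8 GT/s'; 0.0 if unknown."""
    try:
        return float(text.split()[0])
    except (IndexError, ValueError):
        return 0.0


def check_pcie_link(bdf: str, want_gts: float = 16.0, want_width: int = 16,
                    sysfs_root: str = "/sys") -> list:
    """Return a list of problems with the negotiated PCIe link (empty if OK)."""
    dev = Path(sysfs_root) / "bus" / "pci" / "devices" / bdf
    speed = _speed_gts((dev / "current_link_speed").read_text())
    width = int((dev / "current_link_width").read_text().strip())
    problems = []
    if speed < want_gts:
        problems.append(f"link speed {speed:g} GT/s, expected {want_gts:g} GT/s")
    if width < want_width:
        problems.append(f"link width x{width}, expected x{want_width}")
    return problems


if __name__ == "__main__":
    # "0000:41:00.0" is a placeholder PCI address; find yours with `lspci -d 15b3:`.
    print(check_pcie_link("0000:41:00.0") or "PCIe 4.0 x16 link negotiated")
```

An empty list means the slot delivered the expected Gen4 x16 link; anything else usually points to slot choice, riser, or BIOS settings.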

Company Profile

With over ten years of industry expertise, we maintain extensive manufacturing capabilities and a robust technical support organization. Our product portfolio includes original equipment from leading brands such as Mellanox (now NVIDIA Networking), Ruckus, Aruba, and Extreme Networks. We stock over 10 million units of network equipment, including switches, network interface cards, wireless solutions, and connectivity components. Our professional sales and engineering teams provide 24/7 consultation and technical support, ensuring reliable service for global customers.