200Gb/s to 2x100Gb/s Mellanox AOC IB HDR QSFP56 to 2x QSFP56 MFS1S50-H005E MFS1S50-H010E MFS1S50-H015V

Product Details:

| Brand Name: | Mellanox |
|---|---|
| Model Number: | MFS1S50-H010E |
| Document: | MFS1S50-H0xxV.pdf |

Payment & Shipping Terms:

| Minimum Order Quantity: | 1 pc |
|---|---|
| Price: | Negotiable |
| Packaging Details: | Outer box |
| Delivery Time: | Based on inventory |
| Payment Terms: | T/T |
| Supply Ability: | Supply by project/batch |

Detail Information

| Availability: | In Stock | Warranty: | 1 Year |
|---|---|---|---|
| Condition: | New And Original | Technology: | InfiniBand |
| Data Rate: | Up To 200Gb/s | Connector Type: | QSFP56 |
| Diameter: | 3 ±0.2mm | Minimum Bend Radius: | 30mm |
| Near-end Output Eye Height: | 70mVpp | Near-end ESMW: | 0.265UI |


Product Description

NVIDIA MFS1S50-H010E 200G to Dual 100G QSFP56 Active Optical Splitter Cable

Product Overview

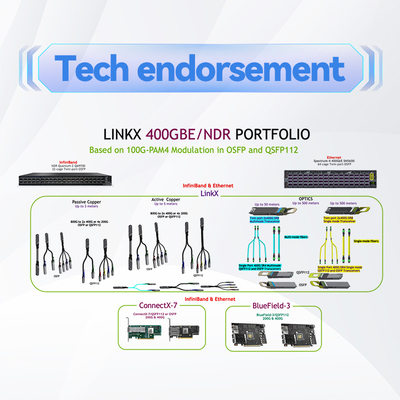

The NVIDIA® MFS1S50-H010E is an active optical splitter cable built for modern high-performance data centers. It splits a single 200Gb/s QSFP56 port into two independent 100Gb/s QSFP56 links, adding port flexibility without compromising signal integrity. Unlike passive cabling, this active cable performs electrical-to-optical conversion and signal conditioning inside its connector ends, which maintains performance across its full 10-meter length. This makes it well suited to connecting one high-speed switch port to two servers in demanding computing environments.

Key Features

- Bi-directional 200G to 2x100G conversion capability

- Ultra-low latency design for high-frequency applications

- 4×50G PAM4 modulation technology

- Compliant with InfiniBand HDR and 200GbE standards

- Integrated Digital Diagnostic Monitoring (DDM) interface

- Hot-pluggable QSFP56 form factor

- RoHS compliant and energy efficient design
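As a worked check of how the 4×50G PAM4 lanes add up to the advertised split, assuming (as for HDR100) that each 100Gb/s leg carries two of the four 50Gb/s lanes from the 200Gb/s end:

$$
\underbrace{4 \times 50\ \text{Gb/s}}_{\text{200G QSFP56 end}} = 200\ \text{Gb/s} = 2 \times \underbrace{\left(2 \times 50\ \text{Gb/s}\right)}_{\text{each 100G leg}}
$$

Which physical lanes are routed to which leg is not stated here; consult the product document for the exact lane mapping.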

Technical Specifications

| Parameter | Specification |

|---|---|

| Data Rate | 200Gb/s to 2×100Gb/s |

| Interface | QSFP56 to 2x QSFP56 |

| Length / Max Distance | 10 meters for MFS1S50-H010E; 30 meters maximum for the product family (MMF) |

| Power Consumption | 4.5W (200G end), 3.0W (100G end) |

| Cable Diameter | 3.0±0.2mm |

| Operating Temperature | 0°C to 70°C |

Technology & Compliance

This optical cable uses VCSEL-based optical technology and complies with SFF-8665 (QSFP56), SFF-8636 (I²C management), and IBTA InfiniBand HDR standards. Its integrated EEPROM stores product information and exposes status monitoring, while programmable Rx output amplitude and pre-emphasis help preserve signal integrity across different deployment scenarios. As an active alternative to passive cabling, it offers high reliability for critical data center applications.
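The status monitoring described above follows SFF-8636, which defines where a QSFP module reports its live sensor readings. The Python sketch below decodes those readings from a raw dump of the module's lower memory page (bytes 0-127). It assumes you have already read that page from the host; how you obtain it depends on your platform's tooling and is not covered here. The names `QsfpMonitors` and `decode_monitors` are hypothetical, and the byte offsets and scale factors come from the SFF-8636 monitor definitions, not from NVIDIA documentation specific to this cable.

```python
"""Sketch: decode SFF-8636 monitor values from a QSFP lower-page EEPROM dump.

Assumption: `page` holds bytes 0-127 of the module's lower memory page.
Offsets and units follow the SFF-8636 free-side and channel monitor fields.
"""

from dataclasses import dataclass


@dataclass
class QsfpMonitors:  # hypothetical container, for illustration only
    temperature_c: float      # module temperature, degrees Celsius
    vcc_v: float              # supply voltage, volts
    rx_power_mw: list[float]  # received optical power per lane, milliwatts
    tx_bias_ma: list[float]   # laser bias current per lane, milliamps


def _u16(page: bytes, offset: int) -> int:
    # Monitor values are stored MSB first (big-endian).
    return int.from_bytes(page[offset:offset + 2], "big")


def decode_monitors(page: bytes) -> QsfpMonitors:
    if len(page) < 50:
        raise ValueError("need at least bytes 0-49 of the lower page")
    # Bytes 22-23: temperature, signed two's complement, 1/256 degC per LSB.
    temp = int.from_bytes(page[22:24], "big", signed=True) / 256.0
    # Bytes 26-27: supply voltage, unsigned, 100 uV per LSB.
    vcc = _u16(page, 26) * 100e-6
    # Bytes 34-41: Rx power for lanes 1-4, unsigned, 0.1 uW per LSB -> mW.
    rx = [_u16(page, 34 + 2 * i) * 1e-4 for i in range(4)]
    # Bytes 42-49: Tx bias for lanes 1-4, unsigned, 2 uA per LSB -> mA.
    bias = [_u16(page, 42 + 2 * i) * 2e-3 for i in range(4)]
    return QsfpMonitors(temp, vcc, rx, bias)


if __name__ == "__main__":
    # Synthetic page for illustration only: 35 degC, 3.3 V, lane 1 Rx 0.5 mW.
    demo = bytearray(128)
    demo[22:24] = (35 * 256).to_bytes(2, "big", signed=True)
    demo[26:28] = (33000).to_bytes(2, "big")
    demo[34:36] = (5000).to_bytes(2, "big")
    m = decode_monitors(bytes(demo))
    print(m.temperature_c)  # 35.0
```

Decoding from a byte buffer, rather than reading the bus directly, keeps the sketch independent of any particular host driver or management tool.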

Applications

- High-performance computing clusters

- Cloud data center interconnects

- AI and machine learning infrastructure

- Financial trading networks

- Enterprise storage area networks

- High-frequency trading systems

Performance Advantages

The MFS1S50-H010E offers several advantages over traditional connectivity solutions. Its active design maintains better signal integrity over distance than passive cabling, while the splitter function lets one switch port serve two hosts, reducing hardware requirements and simplifying network architecture. Its low power consumption and compliance with modern data center efficiency standards help you expand network capacity while keeping energy costs in check.

Support & Services

We provide technical support and customer service 24/7. All products undergo rigorous quality testing and carry a 1-year warranty. Our global logistics network ensures timely delivery, and our technical team offers integration assistance for large-scale deployments. Custom length options are available for volume orders, giving flexibility for specialized infrastructure requirements.

Frequently Asked Questions

Q: What is the difference between this and a standard passive fiber cable?

A: This active optical cable includes signal processing electronics that maintain signal integrity over longer distances compared to passive solutions, while also providing diagnostic monitoring capabilities.

Q: Can I use this cable for both InfiniBand and Ethernet applications?

A: Yes, the cable supports both InfiniBand HDR and 200GbE protocols, making it versatile for different network environments.

Q: What is the minimum bend radius for this optical fiber cable?

A: The minimum bend radius is 30mm to prevent damage to the internal fibers and maintain optimal performance.

Q: Does this solution replace traditional passive fiber cable configurations?

A: Yes, it provides an active alternative to passive fiber cable setups with enhanced signal integrity and diagnostic capabilities.

Installation Guidelines

- Always use protective dust caps when connectors are not in use

- Clean fiber connectors before installation to prevent contamination

- Avoid bending the cable beyond the specified minimum radius

- Follow proper ESD precautions during handling

- Ensure host system compatibility before deployment

- Verify that your environment stays within the 0°C to 70°C operating temperature range

Company Profile

With over ten years of industry expertise, we operate extensive manufacturing facilities supported by a skilled technical team. Our company has established a strong reputation for delivering high-quality networking solutions to a global clientele. We maintain partnerships with leading technology brands including Mellanox, Ruckus, Aruba, and Extreme, offering authentic new networking equipment including switches, adapters, wireless access points, controllers, and connectivity solutions. Our $10 million inventory ensures ready availability of products for both small and large-scale deployments, backed by 24/7 customer support and technical consultation services. Our dedicated sales and engineering teams have earned international recognition for reliability and customer satisfaction.