OEM QSFP28 EDR 100GbE Mellanox Network Card MCX556A-ECAT ConnectX-5

Product Details:

| Brand Name: | Mellanox |
|---|---|
| Model Number: | MCX556A-ECAT |
| Document: | CONNECTX-5 infiniband.pdf |

Payment & Shipping Terms:

| Minimum Order Quantity: | 1 pc |
|---|---|
| Price: | Negotiable |
| Packaging Details: | Outer box |
| Delivery Time: | Based on inventory |
| Payment Terms: | T/T |
| Supply Ability: | Supply by project/batch |

Detail Information

| Product Status: | Stock | Application: | Server |
|---|---|---|---|
| Interface Type: | InfiniBand | Ports: | Dual |
| Max Speed: | 100GbE | Connector Type: | QSFP28 |
| Type: | Wired | Condition: | New And Original |
| Warranty Time: | 1 Year | | |
| Highlight: | OEM QSFP28 Mellanox Network Card, EDR 100GbE Mellanox Network Card, MCX556A-ECAT Network Card | | |

Product Description

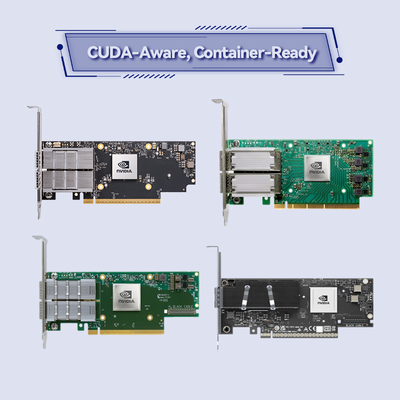

NVIDIA ConnectX-5 InfiniBand Adapter Card - 100Gb/s Speed with Advanced RDMA Technology

MCX556A-ECAT High-Performance Networking Solution

The NVIDIA ConnectX-5 InfiniBand adapter card delivers dual-port 100Gb/s connectivity with ultra-low latency, high message rates, and hardware offload capabilities, making it well suited to demanding HPC, cloud, and storage environments. With its embedded PCIe switch and comprehensive virtualization support, this NIC improves efficiency in modern data centers.

MCX556A-ECAT Key Advantages:

- Dual-port 100Gb/s InfiniBand and Ethernet connectivity

- Ultra-low latency and high message rate performance

- Hardware offloads for NVMe over Fabrics and storage protocols

- Advanced RDMA capabilities with GPUDirect support

- PCIe Gen3 x16 host interface (PCIe Gen4 x16 is available on the MCX556A-EDAT variant)

Product Overview

The ConnectX-5 InfiniBand adapter from NVIDIA is a high-performance network interface card designed for data centers and high-performance computing environments. This NIC supports both InfiniBand and Ethernet protocols, offering flexibility without compromising performance. Its offload engines significantly reduce CPU overhead while sustaining high bandwidth.

MCX556A-ECAT Key Features

- High-Speed Connectivity: Dual-port design supporting up to 100Gb/s per port, with backward compatibility to lower speeds

- Advanced Offload Technology: Hardware offloads for NVMe over Fabrics, MPI tag matching, and rendezvous protocols

- Virtualization Support: SR-IOV with up to 512 virtual functions and NVIDIA ASAP² technology

- RDMA Optimization: Enhanced RDMA capabilities with GPUDirect for accelerated communication

MCX556A-ECAT Technical Specifications

| Specification | Details |
|---|---|
| Interface | PCIe Gen3 x16 (Gen4 x16 on MCX556A-EDAT) |
| Data Rate | Up to 100Gb/s per port |
| Ports | Dual QSFP28 |
| Protocols | InfiniBand, Ethernet (100/50/40/25/10/1GbE) |
| RDMA Support | Yes, with RoCE |
| Virtualization | SR-IOV, 512 VFs |
| Offloads | NVMe-oF, MPI, Checksum, TSO |
| OS Support | Linux, Windows, VMware, FreeBSD |

Applications

The ConnectX-5 network card excels in various high-demand environments:

- High-Performance Computing (HPC): MPI and SHMEM/PGAS offloads, adaptive routing, and out-of-order RDMA operations

- Cloud Data Centers: NVMe over Fabrics offloads, storage protocol acceleration, and virtualization support

- Artificial Intelligence/Machine Learning: GPUDirect RDMA for accelerated data transfer between GPUs

- Storage Systems: T10 DIF signature handover, SRP, iSER, and NVMe-oF target offloads

Product Selection Guide

| Form Factor | Port Configuration | Interface | Part Number |
|---|---|---|---|
| PCIe HHHL | Single-port QSFP28 | PCIe Gen3 x16 | MCX555A-ECAT |
| PCIe HHHL | Dual-port QSFP28 | PCIe Gen3 x16 | MCX556A-ECAT |
| PCIe HHHL | Dual-port QSFP28 | PCIe Gen4 x16 | MCX556A-EDAT |
| OCP 2.0 Type 1 | Single-port QSFP28 | PCIe Gen3 x16 | MCX545B-ECAN |
| OCP 2.0 Type 2 | Single-port QSFP28 | PCIe Gen3 x16 | MCX545A-ECAN |
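When choosing between the Gen3 and Gen4 dual-port cards in the selection guide, it can help to compare the host slot bandwidth with the combined port speed. The following Python sketch is a rough estimate only: it applies the 128b/130b line encoding used by PCIe Gen3 and Gen4 and ignores packet and protocol overhead, so real throughput will be somewhat lower. The function names are illustrative and not part of any NVIDIA tool.

```python
# Rough one-direction PCIe bandwidth estimate (line encoding only,
# packet/protocol overhead is ignored, so real figures are lower).
GT_PER_LANE = {"Gen3": 8.0, "Gen4": 16.0}  # giga-transfers per second per lane
ENCODING = 128 / 130  # 128b/130b encoding used by PCIe Gen3 and Gen4


def pcie_gbps(gen: str, lanes: int = 16) -> float:
    """Approximate one-direction link bandwidth in Gb/s."""
    return GT_PER_LANE[gen] * lanes * ENCODING


def ports_at_line_rate(gen: str, port_gbps: float = 100.0, lanes: int = 16) -> int:
    """Number of ports of the given speed the slot can feed at full rate."""
    return int(pcie_gbps(gen, lanes) // port_gbps)


if __name__ == "__main__":
    for gen in ("Gen3", "Gen4"):
        print(f"{gen} x16: {pcie_gbps(gen):.1f} Gb/s, "
              f"{ports_at_line_rate(gen)} port(s) at 100 Gb/s")
```

Under these assumptions a Gen3 x16 slot provides about 126 Gb/s per direction, enough for one port at full 100Gb/s but not both simultaneously, while a Gen4 x16 slot provides about 252 Gb/s.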

Frequently Asked Questions

What is the difference between ConnectX-5 and ConnectX-6?

While both are high-performance network cards, ConnectX-6 offers higher throughput (200Gb/s) and enhanced security features compared to ConnectX-5's 100Gb/s capability. However, the ConnectX-5 remains an excellent choice for most enterprise and HPC applications with its proven reliability and comprehensive feature set.

Does this NIC support both InfiniBand and Ethernet?

Yes, the ConnectX-5 adapter card supports both InfiniBand and Ethernet protocols, offering flexibility for different network environments. The same physical hardware can be configured for either protocol through firmware settings.
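As an illustration of what such a firmware setting can look like, the Python sketch below only builds (and does not run) a command line for `mlxconfig` from the NVIDIA Firmware Tools, which is an assumption here since this listing does not name a tool. It assumes the `LINK_TYPE_P1`/`LINK_TYPE_P2` parameters with 1 for InfiniBand and 2 for Ethernet; the device name is a hypothetical placeholder, and the exact procedure should be checked against the documentation for your firmware version.

```python
from typing import List, Sequence

# Assumed mlxconfig LINK_TYPE values: 1 = InfiniBand, 2 = Ethernet.
LINK_TYPE = {"infiniband": 1, "ethernet": 2}


def link_type_command(device: str, protocol: str,
                      ports: Sequence[int] = (1, 2)) -> List[str]:
    """Build (but do not execute) an mlxconfig command that sets the port protocol."""
    value = LINK_TYPE[protocol.lower()]
    settings = [f"LINK_TYPE_P{port}={value}" for port in ports]
    return ["mlxconfig", "-d", device, "set", *settings]


if __name__ == "__main__":
    # "<device>" is a placeholder; substitute the device reported on your system.
    print(" ".join(link_type_command("<device>", "ethernet")))
```

A changed link type normally takes effect only after a reboot or firmware reset.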

What operating systems are supported?

The ConnectX-5 network card supports a wide range of operating systems, including Windows Server, FreeBSD, VMware ESXi, and RHEL/CentOS and other Linux distributions through the OpenFabrics Enterprise Distribution (OFED).

Compatibility Notes

- Ensure your system has an appropriate PCIe x16 slot (Gen3 or Gen4) for optimal performance

- For Ethernet operation at speeds below 100GbE, port adapters (for example QSFP28-to-SFP28) or breakout cables may be required

- When using NVIDIA Socket Direct technology in virtualization scenarios, certain restrictions may apply

- Verify compatible cable types (passive copper, active optical) for your specific deployment

- Check firmware and driver requirements for specific offload capabilities

Company Introduction

With over a decade of industry experience, our company has established itself as a leading provider of networking solutions. We operate extensive manufacturing facilities and maintain a robust technical team that supports our growing customer base. Our organization has built a strong reputation for delivering high-quality products at competitive prices while providing exceptional service.